TL;DR: We started experimenting with LLMs the week their APIs became available. Early results were rough (hallucinated yFiles calls, missing context). We pushed through with retrieval and tool‑driven approaches, restructured our documentation for machines, and built MCP tools so agents can look up real answers instead of guessing. Today, our Support Center and agentic workflows can answer advanced yFiles questions and even scaffold full apps, and with yFiles for HTML 3.0 plus linters, agents often generate working code in a single pass.

Why we cared about LLMs from day one

yFiles is a comprehensive library for graph visualization and automatic layouts—built for production‑grade applications. That depth comes with a learning curve, especially for first‑time users and busy teams who need results quickly.

So when the ChatGPT (3.5) API arrived, we asked a simple question: could LLMs shorten the path from intent to a running graph app? We integrated ChatGPT into our internal Mattermost the same week and immediately started code‑generation trials against yFiles for HTML (JavaScript/TypeScript).

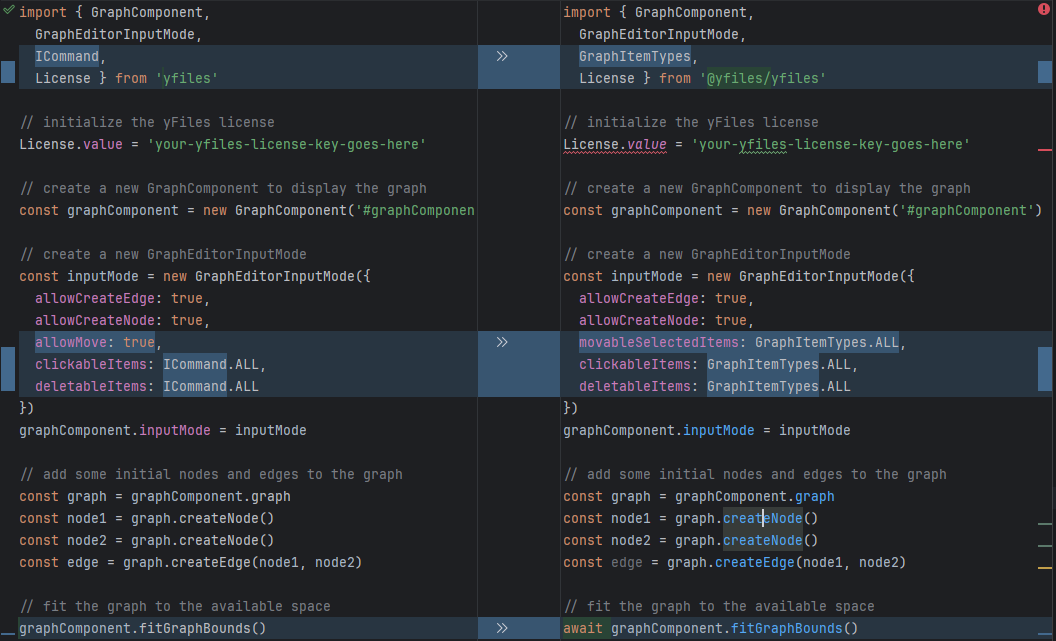

Reality check: early models often hallucinated our API. A typical example: assigning enumeration values to algorithm properties that don’t exist. Half the lines in a snippet could look plausible and still be wrong. We also ran into tiny context windows and no built‑in tool‑calling.

Before it was called RAG: making context the star

Because LLMs hadn’t “seen” much yFiles code during training (it is a specialized commercial library, not something that appears in millions of GitHub repos), we realized we’d need to teach them on the fly.

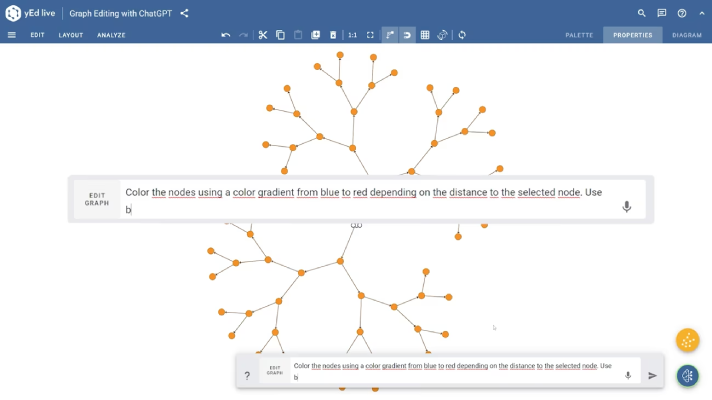

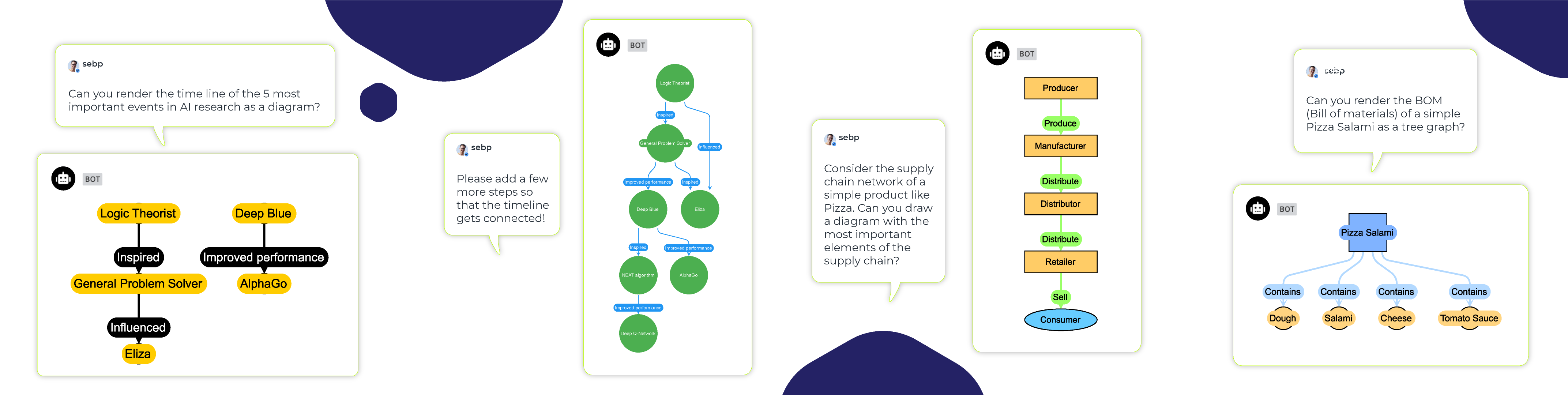

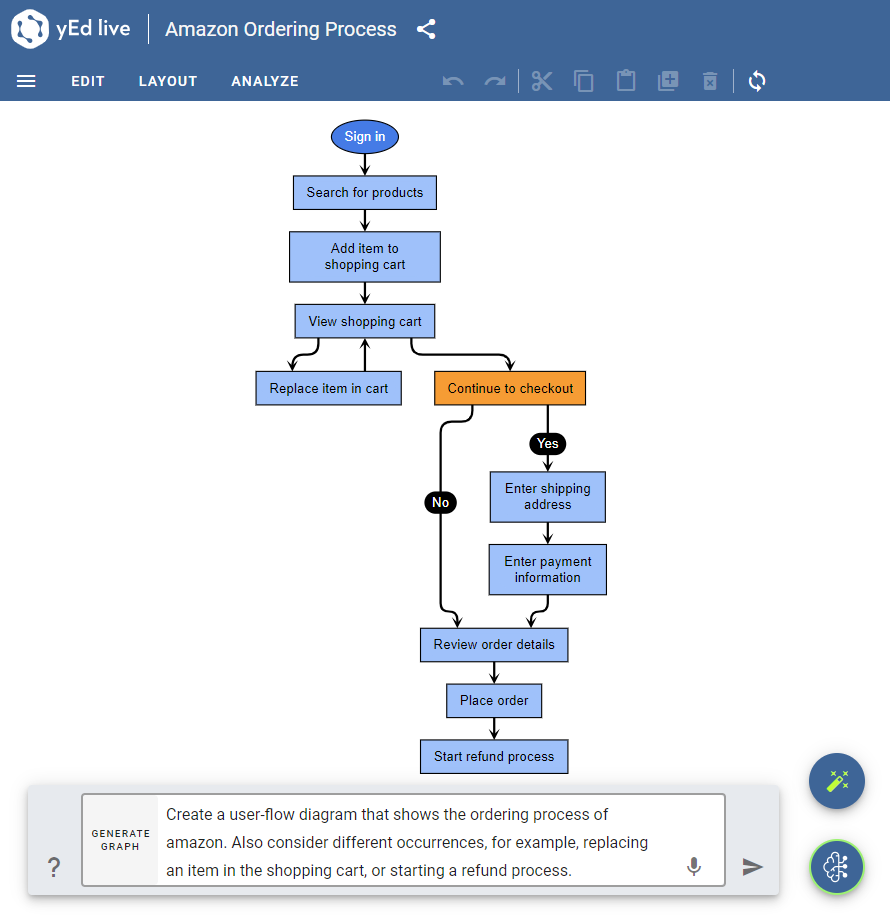

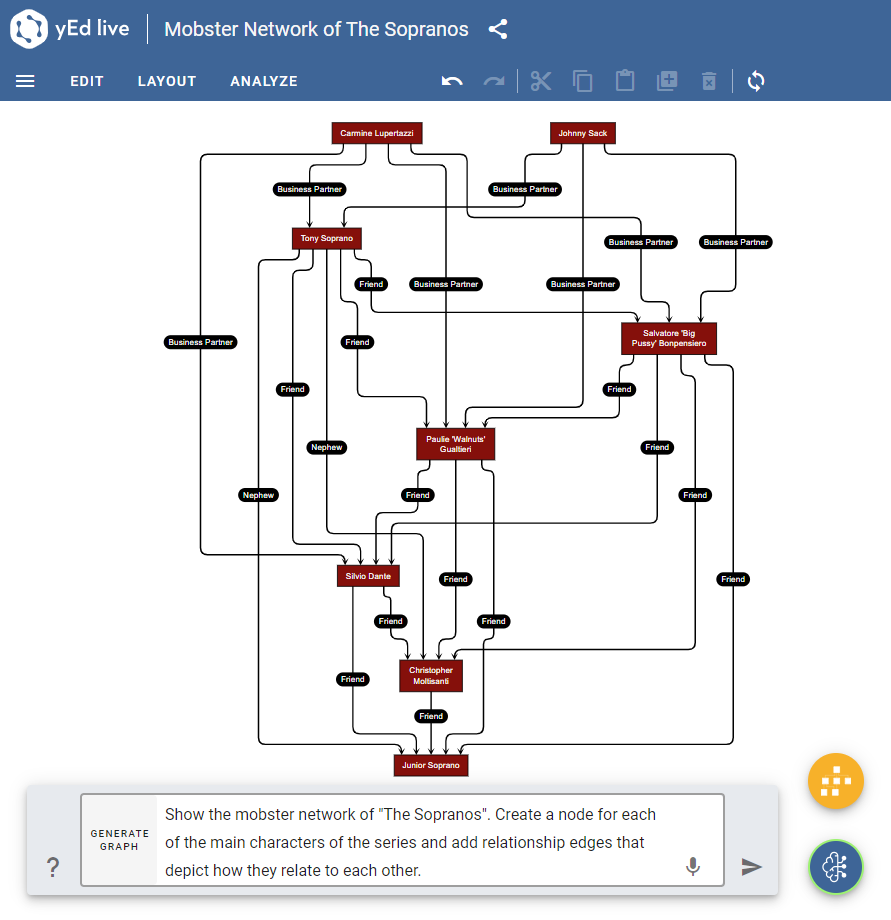

Our early public experiments happened inside yEd‑Live. Users could ask in natural language (and even by voice) for graph edits; behind the scenes the LLM wrote JavaScript and executed it in a browser sandbox with access to the current graph.

To make this work under 2023‑era constraints, we:

- Fed concise API descriptions into prompts,

- Used two‑pass runs (a first call to decide which API pieces were needed, a second call to execute with those pieces),

- Parsed raw streams to simulate tool‑calling before it existed,

- Auto‑completed streaming JSON so you could watch the graph populate live — one edge at a time.
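The last bullet, auto‑completing streaming JSON, can be sketched roughly as follows. This is a minimal illustration and not the code that ran in yEd‑Live: the function names and the `{"edges": [...]}` payload shape are hypothetical. It only repairs open strings, trailing commas, and unclosed brackets; a prefix that stops anywhere else (for example right after a colon) is simply skipped until more data arrives.

```typescript
// Best-effort repair of a truncated JSON prefix: close an open string, drop a
// trailing comma, then append the missing "]" and "}" in the right order.
function completePartialJson(partial: string): string {
  const closers: string[] = []; // closing brackets still owed, innermost last
  let inString = false;
  let escaped = false;

  for (let i = 0; i < partial.length; i++) {
    const ch = partial.charAt(i);
    if (inString) {
      if (escaped) escaped = false;
      else if (ch === "\\") escaped = true;
      else if (ch === '"') inString = false;
      continue;
    }
    if (ch === '"') inString = true;
    else if (ch === "{") closers.push("}");
    else if (ch === "[") closers.push("]");
    else if (ch === "}" || ch === "]") closers.pop();
  }

  let repaired = partial;
  if (inString) {
    if (escaped) repaired = repaired.slice(0, -1); // drop a dangling backslash
    repaired += '"';
  } else {
    repaired = repaired.replace(/[\s,]+$/, ""); // drop trailing comma/whitespace
  }
  return repaired + closers.slice().reverse().join("");
}

// Returns the parsed snapshot, or undefined if this prefix cannot be repaired yet.
function parseSnapshot(partial: string): unknown {
  try {
    return JSON.parse(completePartialJson(partial));
  } catch (e) {
    return undefined;
  }
}
```

A caller would append each streamed chunk to a buffer, call `parseSnapshot` on the buffer, and redraw the graph whenever the result is defined, which is what makes edges appear one at a time.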

It was scrappy — but it worked, and it taught us the lesson we still follow:

The quality of context you give the model matters more than clever prompt tricks.

From demos to real product integrations

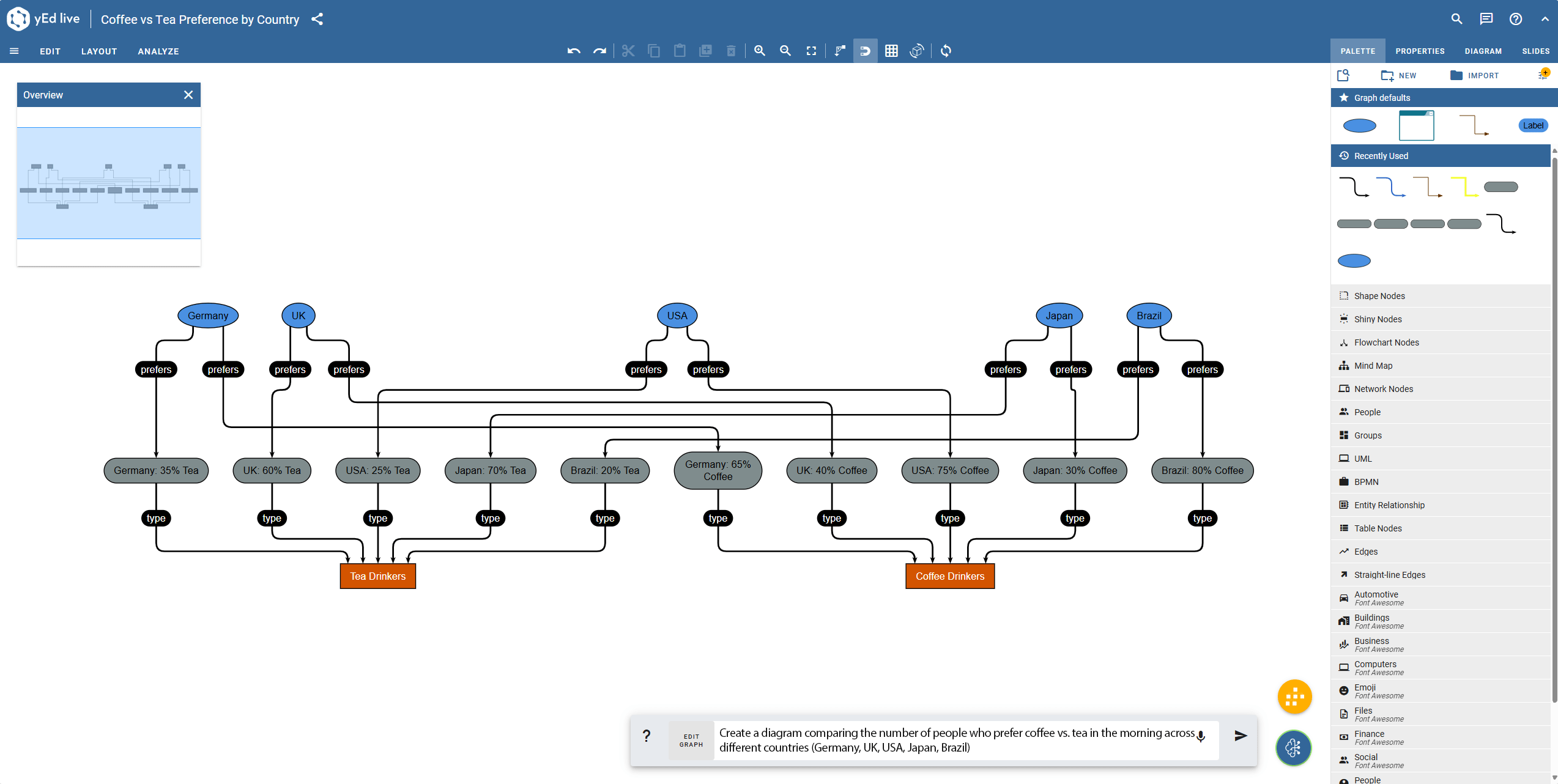

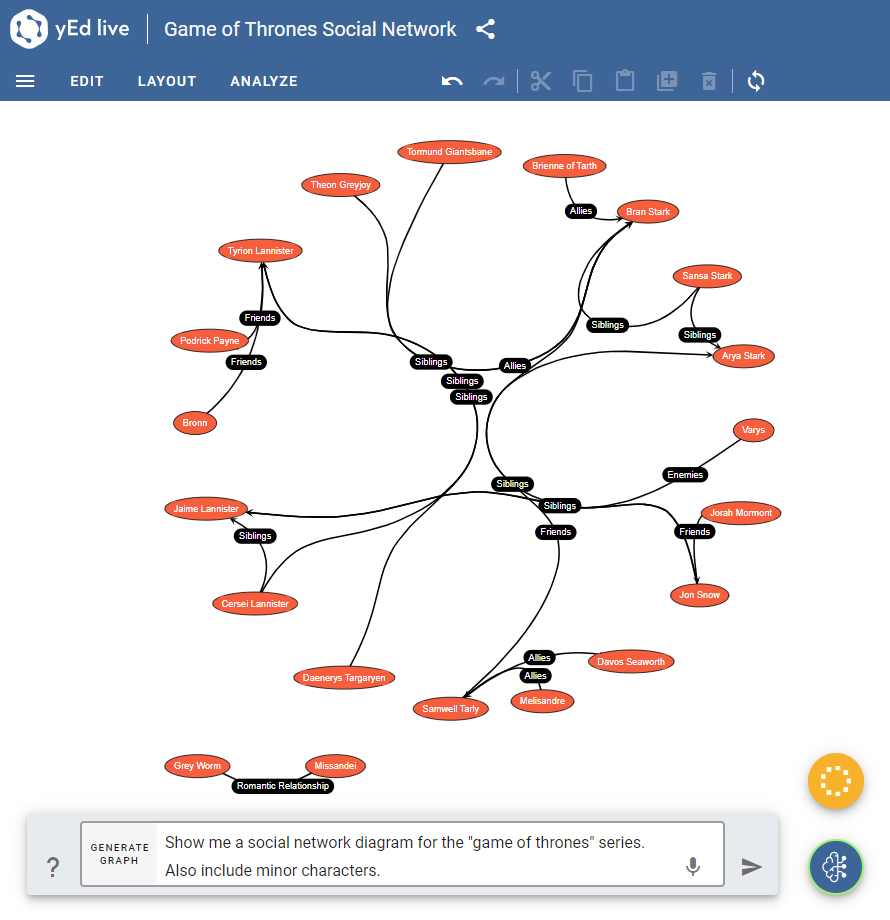

Our prototypes have since evolved into features of yEd‑Live and the Neo4j Data Explorer.

As the diagram shows, user input is turned into structured prompts and sent to OpenAI models, and the responses are converted into arranged graphs and dynamic visuals. These tools are no longer prototypes; they are robust applications designed to streamline data exploration and visualization.

yEd‑Live

Voice + natural‑language graph editing to build graphs, add labels, change colors, calculate shortest paths, apply layouts, and more.

Neo4j Data Explorer

Prompt “Load all movies released between 2010 and 2020 as well as their genres” — we infer schema, generate Cypher, run it, and visualize the results.
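As a rough illustration of the "infer schema, generate Cypher" step, the sketch below builds a schema‑grounded prompt for a model. It is an assumption about how such a step could look, not the Data Explorer's actual code: the `GraphSchema` type, the `buildCypherPrompt` function, and the movie schema used in the usage note are all hypothetical.

```typescript
// Hypothetical description of an inferred graph schema.
interface GraphSchema {
  nodes: { label: string; properties: string[] }[];
  relationships: { type: string; from: string; to: string }[];
}

// Build a prompt that restricts the model to labels, properties, and
// relationship types that actually exist in the database.
function buildCypherPrompt(schema: GraphSchema, question: string): string {
  const nodeLines = schema.nodes.map(
    (n) => "(:" + n.label + " {" + n.properties.join(", ") + "})"
  );
  const relationshipLines = schema.relationships.map(
    (r) => "(:" + r.from + ")-[:" + r.type + "]->(:" + r.to + ")"
  );
  return [
    "Translate the question into a single read-only Cypher query.",
    "Use only these node labels and properties:",
    ...nodeLines,
    "Use only these relationships:",
    ...relationshipLines,
    "Question: " + question,
    "Answer with the Cypher query only.",
  ].join("\n");
}
```

With an assumed schema of `Movie {title, released}`, `Genre {name}`, and an `IN_GENRE` relationship, one plausible model answer to the example prompt would be `MATCH (m:Movie)-[:IN_GENRE]->(g:Genre) WHERE m.released >= 2010 AND m.released <= 2020 RETURN m, g`. That query is an illustration, not captured output.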


These weren’t toys; they helped users explore data and produce working results faster than paging through a dozen API docs.

The turning point: documentation as machine fuel

We’ve always invested heavily in documentation, API reference, and hundreds of code demos. The breakthrough was to reformat and index that content for machines:

- Curated and chunked docs, code samples, and references to be retrieval‑friendly.

- Implemented vector search across members, descriptions, and sections in the Developer’s Guide.

- Built Model Context Protocol (MCP) tools so an agent can look up real answers, not guess:

  - Find an API member by name or description,

  - Inspect class hierarchies and interfaces,

  - Locate demos that use a given API,

  - Retrieve return types and usage patterns,

  - Answer “Where do I get an instance of this interface?” with grounded pointers, and more.
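The retrieval idea behind the vector search and the "find a member by description" tool can be sketched as follows. This is a toy under stated assumptions: term‑frequency vectors stand in for real model embeddings, the function names are hypothetical, and the index entries in the usage note are invented placeholders rather than real yFiles members.

```typescript
interface DocChunk {
  id: string;   // stable identifier of the documentation chunk
  text: string; // the text that gets indexed
}

// Toy "embedding": a term-frequency vector. A real system would call a model.
function embed(text: string): Map<string, number> {
  const vector = new Map<string, number>();
  text.toLowerCase().split(/[^a-z0-9]+/).forEach((term) => {
    if (term.length > 0) vector.set(term, (vector.get(term) || 0) + 1);
  });
  return vector;
}

// Cosine similarity between two sparse vectors.
function cosine(a: Map<string, number>, b: Map<string, number>): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  a.forEach((value, term) => {
    dot += value * (b.get(term) || 0);
    normA += value * value;
  });
  b.forEach((value) => {
    normB += value * value;
  });
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Return the topK chunks most similar to the query, best match first.
function searchDocs(index: DocChunk[], query: string, topK: number): { id: string; score: number }[] {
  const queryVector = embed(query);
  return index
    .map((chunk) => ({ id: chunk.id, score: cosine(queryVector, embed(chunk.text)) }))
    .filter((hit) => hit.score > 0)
    .sort((x, y) => y.score - x.score)
    .slice(0, topK);
}
```

Exposed as a tool, `searchDocs` would return chunk IDs that the agent can then fetch and cite, so the answer is grounded in retrieved text rather than in whatever the model remembers.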

With those tools, modern LLMs began answering advanced yFiles questions reliably, because they could query authoritative sources while reasoning.

Why we didn’t put LLMs inside layout algorithms (and what we did instead)

From day one we tested LLMs on algorithmic reasoning. Early models struggled with basic symbolic tasks; even modern ones aren’t great at performing complex calculations without tools. Using them for precise, high‑performance layout computation simply wasn’t appropriate. We decided not to put LLMs in the critical path of layout/algorithms.

Instead, we focused on where LLMs shine:

- Understanding intent (natural language),

- Composing code from building blocks,

- Retrieving the right patterns from our docs and demos,

- Automating the boring parts of scaffolding an app — or migrating from one UI framework to another.

That choice paid off: users interact more naturally, and engineers ship faster, while the core layout engine remains deterministic, robust, and optimized.
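One way to picture this split is a small dispatcher: the model only produces a structured intent, and ordinary code does the computation. The sketch below is illustrative and uses no yFiles API. The JSON command shape and the function names are assumptions, and a plain breadth‑first search stands in for the real graph algorithms.

```typescript
// Deterministic part: breadth-first search on an unweighted, directed graph.
function shortestPath(graph: Map<string, string[]>, from: string, to: string): string[] | null {
  const previous = new Map<string, string | null>();
  previous.set(from, null);
  const queue: string[] = [from];
  let head = 0;

  while (head < queue.length) {
    const current = queue[head++];
    if (current === to) {
      // Walk the predecessor chain back to the start node.
      const path: string[] = [];
      let step: string | null = current;
      while (step !== null) {
        path.unshift(step);
        step = previous.get(step) ?? null;
      }
      return path;
    }
    (graph.get(current) || []).forEach((neighbor) => {
      if (!previous.has(neighbor)) {
        previous.set(neighbor, current);
        queue.push(neighbor);
      }
    });
  }
  return null; // target not reachable
}

// LLM-facing part: the model emits JSON such as
// {"action":"shortestPath","from":"a","to":"e"} (hypothetical shape),
// and this function validates it before running any code.
function handleIntent(graph: Map<string, string[]>, modelOutput: string): string[] | null {
  const intent = JSON.parse(modelOutput) as { action?: string; from?: string; to?: string };
  if (
    intent.action === "shortestPath" &&
    typeof intent.from === "string" &&
    typeof intent.to === "string"
  ) {
    return shortestPath(graph, intent.from, intent.to);
  }
  throw new Error("Unsupported intent: " + String(intent.action));
}
```

The model never computes the path itself. It only names the operation and its arguments, so the result is reproducible regardless of which model produced the intent.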

And to be clear: this isn’t a pivot to “AI‑only.” It’s a pragmatic expansion of how people can work with yFiles.

Today: Support Center answers and agentic app generation

AI answers in the Support Center

Customers can opt in to receive an AI‑generated answer that uses our MCP tools and curated retrieval.

The agent:

- Identifies relevant API entries and demos,

- Synthesizes an explanation,

- Proposes a minimal working example,

- Links to the exact sections of the docs.

It’s already useful for API questions and simple tasks, and we’re extending it to more complex scenarios.

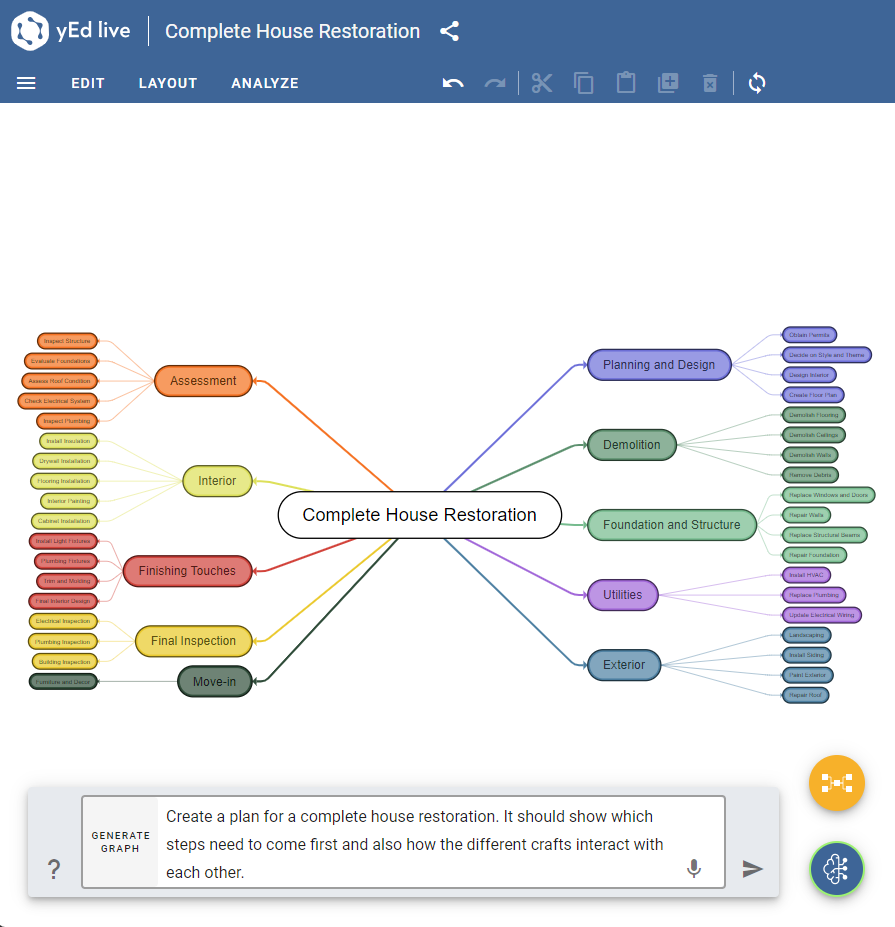

“AI engineer” workflows that scaffold full yFiles applications

Give an agent your JSON dataset, or connect it to a graph database (e.g., Neo4j). The agent:

- Inspects the schema / infers structure,

- Designs a query (if needed),

- Plans node/edge styles and interactions,

- Generates a working TypeScript app with yFiles — often in a single pass,

- Runs our linters to fix common mistakes automatically.

This last step matters because we recently shipped yFiles for HTML 3.0, a major API refresh. LLMs trained on older versions will naturally suggest mostly‑correct‑but‑dated calls. Our linters catch and rewrite those automatically, so the final result targets 3.0 idioms.
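The rewrite step can be pictured as a table of renames applied to generated code. The sketch below is a deliberately simplified stand‑in: a production linter would work on the syntax tree rather than on text, and `OldLayoutName`, `legacyOption`, and their replacements are hypothetical names, not actual yFiles 2.x or 3.0 identifiers.

```typescript
interface RenameRule {
  from: string; // dated identifier a model might still suggest
  to: string;   // its replacement in the current API
}

// Hypothetical rules for illustration only.
const renameRules: RenameRule[] = [
  { from: "OldLayoutName", to: "NewLayoutName" },
  { from: "legacyOption", to: "modernOption" },
];

function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Replace whole-word occurrences of dated identifiers and report what changed.
function fixDatedCalls(source: string, rules: RenameRule[]): { code: string; fixes: string[] } {
  let code = source;
  const fixes: string[] = [];
  rules.forEach((rule) => {
    const pattern = new RegExp("\\b" + escapeRegExp(rule.from) + "\\b", "g");
    const updated = code.replace(pattern, () => rule.to);
    if (updated !== code) {
      fixes.push(rule.from + " -> " + rule.to);
      code = updated;
    }
  });
  return { code, fixes };
}
```

Returning the list of applied fixes alongside the code lets the agent see what was corrected, which helps it avoid repeating the same dated call later in the session.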

In practice, we routinely see agents produce usable starter apps quickly — and at low token cost — even with the more capable models.

What this means for you

- New to yFiles? The learning curve is dramatically lower. Describe intent (“Build a visualization from this supply‑chain JSON and highlight bottlenecks”), and start from a running app instead of an empty editor.

- Leading a team? Agents accelerate prototyping and documentation lookup, so developers focus on domain logic and UX — not boilerplate.

- AI enthusiast? This is a case study in making a specialized commercial library play nicely with LLMs. The secret wasn’t magic prompts; it was high‑quality, machine‑readable context + tools.

A note to skeptics: AI is optional (and the classic path thrives)

We hear you. If you prefer the classic, code‑first approach, you’re in good company — our customers have built successful yFiles applications for more than two decades without AI.

- You don’t have to use AI to get great results with yFiles.

- With yFiles for HTML 3.0, we’ve simplified the API and removed legacy friction. It’s easier for humans than ever.

- Our investment in better docs, examples, and toolability was for humans first. LLMs benefit because they behave like diligent newcomers: they read, search, and compose from examples.

- We’re not changing our business to “be AI.” We’re offering AI‑assisted paths alongside the proven workflows you already know.

If you want to keep working exactly as you do today, you can. If you want to try an AI‑assisted on‑ramp, it’s there when you’re ready.


Where we’re headed

- Richer IDE/CLI integrations: simpler setup so your local agent can “speak yFiles” immediately.

- Deeper agent patterns: planning loops that pull in styles, layout presets, and best practices for specific graph types and data sources (hierarchies, BPMN, supply chain, network topologies; files, databases, Web APIs, etc.).

- Human docs improved by AI learnings: insights we wrote “for the model” turned out to be great for humans; we’re feeding them back into the docs.

- More guardrails: expanding linters and validators so agents produce production‑grade code more consistently and with fewer tokens.

Try it yourself

We’ve published the tools so you can use them with your own agent. The flow is simple:

- Download yFiles via the yfiles-dev-suite CLI and log in to the Customer Center.

- Enable our MCP tools in your IDE/CLI agent (most popular frameworks and editors supported).

- Point the agent at your data (JSON/CSV or a graph database like Neo4j).

- Ask for the app you want. The agent will retrieve docs/demos, generate code, and even test it automatically.

Prefer the classic route? Jump straight into yFiles 3.0 with updated samples and guides — and enjoy faster, richer support answers from our team, who also benefit from using these tools internally.

Appendix: lessons learned

- You won’t out‑prompt missing knowledge. Invest in retrieval from curated, authoritative sources.

- Small, composable tools beat one mega‑tool. Our MCP tools answer specific, recurrent questions (usage, hierarchy, return types) and provide links to further information.

- Make docs machine‑readable. Chunking, IDs, canonical links, and consistent headings pay off.

- Give agents permission to fix themselves. Linters and formatters close the loop from “almost right” to “actually correct.”

- Don’t force LLMs into roles they’re not good at. Keep deterministic computation where it belongs (e.g., layout algorithms), and use LLMs for intent, composition, and glue.

- Optimize for regular users, too. LLMs emulate diligent newcomers; clearer docs, simpler APIs, and better examples help both humans and models. A win‑win.
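To make the "machine‑readable docs" lesson concrete, here is a minimal sketch of chunking a document by heading and giving every chunk a stable ID and canonical link. It assumes the source text uses Markdown‑style `#` headings, which may not match how the yFiles documentation is actually stored; the function names and the example URL in the usage note are hypothetical.

```typescript
interface Chunk {
  id: string;      // stable ID derived from the heading
  heading: string; // heading text as written
  url: string;     // canonical link an agent can cite
  text: string;    // body text of the section
}

// Turn a heading into a lowercase, dash-separated ID.
function slugify(heading: string): string {
  return heading
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// Split a document into one chunk per heading. Text before the first heading
// is ignored in this sketch.
function chunkByHeading(doc: string, baseUrl: string): Chunk[] {
  const chunks: Chunk[] = [];
  let current: Chunk | null = null;
  const lines = doc.split("\n");

  for (let i = 0; i < lines.length; i++) {
    const line = lines[i].trim();
    const match = /^#{1,6}\s+(.*)$/.exec(line);
    if (match) {
      const heading = match[1].trim();
      const id = slugify(heading);
      current = { id, heading, url: baseUrl + "#" + id, text: "" };
      chunks.push(current);
    } else if (current !== null && line.length > 0) {
      current.text += (current.text.length > 0 ? " " : "") + line;
    }
  }
  return chunks;
}
```

Calling `chunkByHeading(doc, "https://example.invalid/guide")` yields one record per section. Two sections with the same heading would collide on the ID here, so a real pipeline would need to disambiguate them, for example by prefixing the parent heading.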

The promise of AI in developer tools isn’t that it replaces expertise — it’s that it reduces the distance between an idea and a working first version. For graph visualization, that distance used to be measured in doc pages and boilerplate. With yFiles, our MCP tools, and agentic workflows, it’s now measured in minutes and iterations.

If you’ve ever thought “yFiles looks powerful, but I don’t know where to start,” this is your moment, with or without AI. Choose the path that fits your team: classic, AI‑assisted, or a mix of both.
